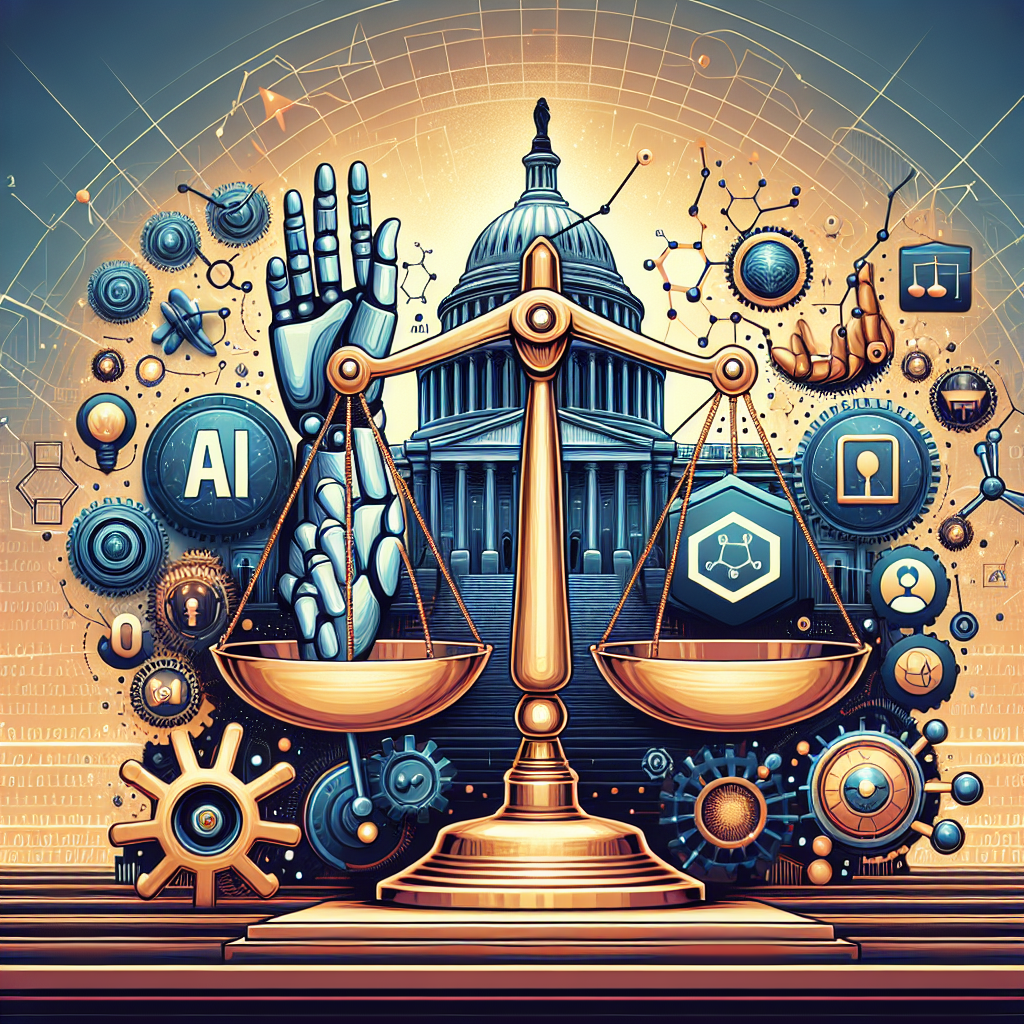

“Can Congress Tame AI? Navigating the Legal Maze of Artificial Intelligence Regulation.”

**Constitutional Limits on AI Regulation: Can Congress Legislate Without Overreach?**

The rapid advancement of artificial intelligence has prompted lawmakers to consider regulatory measures to address its societal impact. However, as Congress explores legislative frameworks for AI, it must navigate constitutional constraints that limit its authority. The challenge lies in balancing the need for oversight with the principles of federalism, free speech, and due process, all of which shape the boundaries of permissible regulation. While Congress has broad powers under the Commerce Clause and other constitutional provisions, AI’s unique characteristics raise complex legal questions about the extent of federal authority and the risk of governmental overreach.

One of the primary constitutional considerations in AI regulation is the Commerce Clause, which grants Congress the power to regulate commerce with foreign nations and among the several states. Because AI technologies are typically developed, deployed, and used across state and national borders, most commercial AI activity likely falls within the scope of interstate commerce. This gives Congress a strong foundation for laws governing AI-related industries, including rules on data privacy, algorithmic transparency, and ethical standards. The difficulty arises when regulations extend beyond commercial applications into areas traditionally governed by state law, such as professional licensing, education, and law enforcement. Federal AI rules that encroach on these domains may be challenged as exceeding Congress’s enumerated powers and intruding on authority the Tenth Amendment reserves to the states.

Another constitutional issue involves the First Amendment, particularly in relation to AI-generated content and algorithmic decision-making. AI systems are increasingly used to create text, images, and videos, raising questions about whether such outputs constitute protected speech. If Congress enacts laws restricting AI-generated content, such as deepfake regulations or misinformation controls, courts may need to determine whether these measures infringe on free speech rights. AI-driven recommendation algorithms, which shape public discourse by curating online content, could also attract regulatory scrutiny. Any content-based restriction, however, would generally be subject to strict scrutiny, under which the government must show that the law is narrowly tailored to serve a compelling interest.

Beyond speech concerns, AI regulation also implicates the Due Process Clauses of the Fifth and Fourteenth Amendments, which constrain the federal and state governments, respectively. Many AI systems are used in high-stakes decisions, such as hiring, lending, and criminal justice. When government actors rely on such systems, for example to determine benefits eligibility or inform sentencing, due process principles may require notice, a meaningful explanation, an opportunity to contest the decision, and a mechanism for redress. Because these constitutional guarantees apply to government action rather than private conduct, a private lender’s or employer’s use of AI is addressed mainly by statute; if Congress mandates AI governance frameworks for such decisions, it would need to build comparable procedural safeguards into the law itself. Government uses of AI that lack such safeguards could face constitutional challenges for depriving individuals of protected interests through arbitrary or biased decision-making.

The nondelegation doctrine presents another potential hurdle. Under this principle, Congress may not transfer its legislative power to executive agencies; a delegation is permissible only if the statute supplies an “intelligible principle” to guide the agency’s discretion. Given the complexity of AI, lawmakers may be tempted to grant broad regulatory discretion to agencies such as the Federal Trade Commission or the Department of Commerce. Although the Supreme Court has not struck down a statute on nondelegation grounds since 1935, several justices have signaled interest in enforcing the doctrine more strictly, so an AI statute that fails to provide sufficiently specific standards could be vulnerable to challenge. This underscores the need for carefully crafted legislation that balances flexibility with clear statutory limits.

Ultimately, while Congress possesses significant authority to regulate AI, constitutional constraints require a nuanced approach. Any legislative effort must carefully navigate federalism concerns, free speech protections, due process requirements, and limits on delegation. As AI continues to evolve, lawmakers must craft regulations that not only address emerging risks but also withstand constitutional scrutiny, ensuring that oversight does not come at the expense of fundamental rights.

**The Role of Federal Agencies in AI Oversight: Delegation or Abdication?**

As artificial intelligence continues to evolve at an unprecedented pace, the question of how to regulate it remains a pressing concern for lawmakers. While Congress has the authority to establish legal frameworks for AI governance, much of the responsibility for oversight has been delegated to federal agencies. This delegation raises important questions about whether such an approach constitutes a necessary division of labor or an abdication of legislative responsibility. Given the complexity and rapid development of AI technologies, federal agencies play a crucial role in interpreting and enforcing regulations. However, the extent to which they should be entrusted with this responsibility remains a subject of debate.

One of the primary reasons Congress relies on federal agencies for AI oversight is the technical expertise required to regulate such a complex field. Agencies such as the Federal Trade Commission (FTC), the Food and Drug Administration (FDA), and the National Institute of Standards and Technology (NIST) possess specialized knowledge that allows them to assess the risks and benefits of AI applications in various sectors. For instance, the FTC has used its existing consumer protection authority to address AI-related concerns such as algorithmic bias and deceptive practices. Similarly, the FDA reviews AI-powered medical devices to ensure they meet safety and effectiveness standards, while NIST, which is not a regulator, develops voluntary technical guidance such as its AI Risk Management Framework. By relying on these agencies, Congress can leverage their expertise to develop more informed and adaptive policies.

However, this delegation also raises concerns about accountability and democratic oversight. Unlike elected legislators, agency officials are not directly accountable to the public, which can lead to regulatory decisions that lack transparency or fail to reflect broader societal concerns. Additionally, agencies may struggle to keep pace with the rapid advancements in AI, leading to either overly restrictive regulations that stifle innovation or insufficient oversight that fails to address emerging risks. Critics argue that by relying too heavily on federal agencies, Congress may be avoiding its responsibility to establish clear legislative guidelines for AI governance. Without comprehensive federal laws, regulatory approaches may become fragmented, with different agencies applying inconsistent standards across industries.

Another challenge associated with agency-led AI oversight is the potential for regulatory capture, where powerful technology companies exert undue influence over the rulemaking process. Given the significant economic and political power of major AI developers, there is a risk that regulatory agencies may prioritize industry interests over public welfare. This concern is particularly relevant in areas such as data privacy, algorithmic transparency, and competition policy, where corporate lobbying efforts can shape regulatory outcomes. To mitigate this risk, some experts advocate for stronger congressional oversight of agency actions, including regular reporting requirements and independent audits of AI-related regulations.

Despite these challenges, federal agencies remain essential to AI governance, particularly in the absence of comprehensive legislation. While Congress has introduced various AI-related bills, few have been enacted into law, leaving agencies to fill the regulatory gap. Moving forward, a balanced approach may be necessary—one in which Congress establishes clear legal principles for AI oversight while allowing agencies the flexibility to implement and enforce these rules effectively. By striking this balance, lawmakers can ensure that AI regulation remains both adaptive and accountable, fostering innovation while protecting public interests.

**State vs. Federal Authority: Who Should Regulate Artificial Intelligence?**

The regulation of artificial intelligence presents a complex legal challenge, particularly when considering the division of authority between state and federal governments. As AI technologies continue to evolve and integrate into various aspects of society, lawmakers must determine the appropriate level of oversight while balancing innovation, economic growth, and public safety. Whether AI regulation should be primarily a federal responsibility or left to individual states remains a contentious issue, and each side points to real benefits of its preferred approach and drawbacks of the other.

On one hand, proponents of federal regulation argue that AI is a national and even global issue that requires uniform standards to ensure consistency across industries and jurisdictions. Given that AI systems often operate across state lines—whether in healthcare, finance, or autonomous vehicles—having a patchwork of state regulations could create significant compliance challenges for businesses and developers. A federal framework would provide clarity, prevent regulatory fragmentation, and establish a cohesive approach to addressing ethical concerns, bias, and accountability in AI systems. Furthermore, because AI has implications for national security, economic competitiveness, and international relations, a centralized regulatory approach would allow the federal government to coordinate policies with global partners and set standards that align with international norms.

At the same time, there are strong arguments in favor of state-level regulation, particularly in light of the federal government’s historical difficulty keeping pace with rapidly advancing technologies. States have often taken the lead in regulating emerging industries, as seen in environmental protection and in data privacy, where California enacted the California Consumer Privacy Act in the absence of a comprehensive federal privacy law. By crafting their own AI regulations, states can tailor policies to the specific needs and concerns of their residents. A decentralized approach also encourages experimentation, enabling states to serve as testing grounds for different regulatory models. If certain policies prove effective, they could later be adopted at the federal level, creating a more informed and adaptable regulatory framework.

However, the potential for conflicting state laws raises concerns about regulatory uncertainty and economic inefficiencies. If each state enacts its own AI regulations, companies operating nationwide may face a complex web of compliance requirements, increasing costs and potentially stifling innovation. This issue is particularly relevant in industries such as autonomous transportation and healthcare, where AI-driven systems must function seamlessly across state borders. Without federal preemption, businesses may struggle to navigate inconsistent rules, leading to legal disputes and delays in AI deployment.

To reconcile these competing concerns, some legal scholars suggest a hybrid approach that combines federal oversight with state-level flexibility. Under this model, the federal government could establish baseline AI regulations that set minimum protections and ethical standards, while allowing states to adopt additional measures tailored to their unique circumstances. This approach would provide a degree of uniformity while still enabling states to address specific concerns, such as labor impacts or consumer protections, in ways that reflect local priorities.

Ultimately, the question of whether AI regulation should be a federal or state responsibility remains unresolved, and the debate is likely to continue as AI technologies advance. While federal regulation offers consistency and global alignment, state-level initiatives provide adaptability and responsiveness. Striking the right balance between these approaches will be crucial in ensuring that AI development remains both innovative and ethically responsible while protecting the interests of businesses, consumers, and society as a whole.